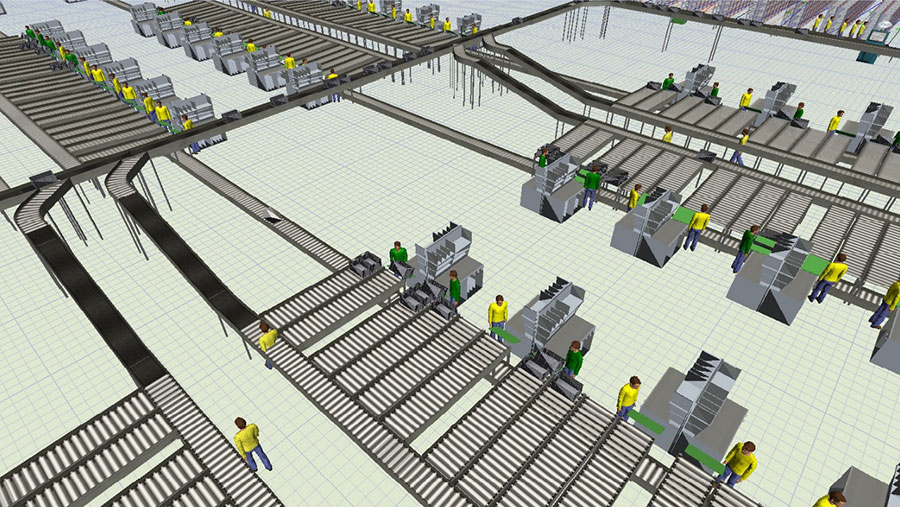

Sometimes you have to take a leap of faith when taking on a new project. Last year I had the opportunity to develop a FlexSim model of a 1,000,000-square-foot, multi-story distribution center operation. The client wanted a comprehensive model that could emulate all of their major operations, from receiving, put-away and picking to sorting, packaging and shipping. It was a major undertaking. Although I was confident I could complete the project, I was still nervous about FlexSim’s ability to handle such a large system model effectively.

Through this project, I gained a good understanding of some of the challenges in working with large FlexSim models. Here are some of the things I learned, along with the solutions I found.

System Memory is a Big Deal

Although FlexSim is a 64-bit software package, it is still essential to be “frugal” with computer memory when building large system models.

- For example, I stored model information in data bundles rather than tables. This helped considerably, since bundles use far less memory than tables and can grow or shrink dynamically to hold the required data.

- This paid off most in the storage of order wave information. At the beginning of each simulated day, the model randomly generated up to 1.3 million lines (11 columns each) of order information based on user-defined order characteristics. Storing that much data in a table created a huge memory overhead for the model.

- One thing to note is that data is harder to review in bundles than in tables. One solution is to write scripts that export the bundle data to an external file for review as needed.
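To make the idea concrete, here is a minimal Python sketch of the two points above: packing each field into one contiguous typed column, and dumping the contents to a file for review. This is an analogy, not FlexScript and not FlexSim's bundle implementation. The `OrderBundle` class, its method names, and the field names are all hypothetical, and the size estimate assumes every value is an 8-byte number.

```python
import csv
import io
from array import array


class OrderBundle:
    """Column-packed store: one typed array per field, not one object per cell.

    Hypothetical illustration only; this is not FlexSim's bundle API.
    """

    def __init__(self, field_names):
        self.field_names = list(field_names)
        # 'd' = 8-byte float, so each column is a contiguous numeric buffer.
        self.columns = [array("d") for _ in self.field_names]

    def add_entry(self, values):
        """Append one row, given one value per field."""
        if len(values) != len(self.columns):
            raise ValueError("expected one value per field")
        for column, value in zip(self.columns, values):
            column.append(value)

    def __len__(self):
        return len(self.columns[0]) if self.columns else 0

    def clear(self):
        """Drop all entries, e.g. before generating the next day's orders."""
        for column in self.columns:
            del column[:]

    def export_csv(self, stream):
        """Write a header row plus every entry so the data can be reviewed."""
        writer = csv.writer(stream, lineterminator="\n")
        writer.writerow(self.field_names)
        writer.writerows(zip(*self.columns))


def packed_size_mb(rows, fields, bytes_per_value=8):
    """Raw payload size in MB, assuming fixed-width numeric values."""
    return rows * fields * bytes_per_value / 1e6


# Usage: two illustrative order lines, exported to an in-memory "file".
bundle = OrderBundle(["order_id", "sku", "quantity"])
bundle.add_entry([1001, 42, 3])
bundle.add_entry([1002, 7, 12])

buffer = io.StringIO()
bundle.export_csv(buffer)
print(buffer.getvalue())

# 1.3 million lines x 11 columns, packed at 8 bytes per value.
print(packed_size_mb(1_300_000, 11))
```

Under that 8-byte assumption, 1.3 million lines of 11 columns come to 14.3 million values, or roughly 114 MB of raw data when packed. Any per-cell bookkeeping added on top of that is what makes a cell-per-object table so much heavier at this scale.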

Distribution Center Model Characteristics

- 90,000+ different SKUs stored in 3 million+ rack locations

- 35,000+ orders to pick, sort, package and ship per day

- 5,000+ order sorting locations

- 1,200+ conveyors

- Areas modeled: trailer receiving, inbound material operations, put-away, wave picking, staging, wave sorting, value-added services, outbound QA, manifesting, manual packaging, and shipping

Model Editing Challenges

It becomes progressively more difficult to make model changes as model size and complexity grow.

- To counteract this, I created Object Groups for similar model elements. This enabled me to select and modify multiple elements simultaneously during the development and experiment phases of the project.

- In my model, for example, I had Object Groups for Order Sorting Locations (5,000+), Shipping Positions (350+), VAS Stations (350+) and Buffer Lanes (120+), which allowed me to make changes quickly as revised information came in.

- In addition to Object Groups, I used User Commands extensively so that most of the model logic could be accessed and modified from a few places. This made it efficient to debug the model during the development phase and to modify the logic for experiment alternatives toward the end of the project.
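The same two habits can be sketched outside FlexSim. The Python below is only an analogy, not FlexSim's Object Group or User Command API. The `ModelObject` class, the `groups` dictionary, and both functions are hypothetical names invented for illustration. It shows one change applied to every member of a group, and one shared rule defined in a single place and called from everywhere that needs it.

```python
from dataclasses import dataclass


@dataclass
class ModelObject:
    """Stand-in for a model element; the fields are illustrative only."""
    name: str
    max_content: int = 1


# Hypothetical groups: the names echo the article, the members are made up.
groups = {
    "BufferLanes": [ModelObject(f"BufferLane{i}") for i in range(1, 4)],
    "VASStations": [ModelObject(f"VASStation{i}") for i in range(1, 3)],
}


def set_group_property(group_name, attribute, value):
    """Apply one change to every member of a group; return the number updated."""
    members = groups[group_name]
    for obj in members:
        setattr(obj, attribute, value)
    return len(members)


def lane_has_room(lane, current_content):
    """Shared rule kept in one place, in the spirit of a user command."""
    return current_content < lane.max_content


# Usage: a revised lane capacity arrives, so update all buffer lanes at once.
updated = set_group_property("BufferLanes", "max_content", 25)
first_lane = groups["BufferLanes"][0]
print(updated, lane_has_room(first_lane, 24), lane_has_room(first_lane, 25))
```

Because the capacity rule lives in one function, changing it for an experiment alternative means editing one definition rather than every object that uses it.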

Comments, Comments, Comments

Finally, it’s more important than ever to use comments to document your model logic in a large system model. As complexity grows, it gets harder to remember how individual elements work together within the system.

In the end, the client considered the project a success and a great investment. The project team was able to use the model to validate the concept design's performance over the planning period, identify opportunities for design improvement, and clarify and refine a shared understanding of how the system would actually work.